Grafana Enterprise Metrics configuration

Grafana Enterprise Metrics uses a configuration file that is a superset of the Grafana Mimir configuration file.

The following extensions are available in the Grafana Enterprise Metrics configuration:

Authentication

You can enable the built-in, token-based authentication mechanism in Grafana Enterprise Metrics by adding the following code to your configuration file:

auth:
  type: enterprise

Admin backend storage

You need to configure Grafana Enterprise Metrics with a bucket that stores administration objects such as clusters, access policies, tokens, or licenses. Ideally, this bucket is separate from the bucket that stores the TSDB blocks.

If you do not configure the object-storage bucket, or configure it incorrectly, Grafana Enterprise Metrics cannot find its license and logs warnings. To minimize operational disruption in case of misconfiguration or license expiry, failing to find a license is not a fatal error.

The admin client is configured with the same options as a blocks storage backend, and every client supported for blocks storage is also supported for the admin API.
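As a sketch, a configuration that enables token-based authentication and keeps administration objects in a bucket separate from the TSDB blocks could look like the following. The endpoint and both bucket names are hypothetical placeholders. Credentials are omitted; supply `access_key_id` and `secret_access_key` as listed in the S3 / Minio backend reference.

```yaml
# Sketch only: the endpoint and bucket names are hypothetical placeholders.
auth:
  type: enterprise

# TSDB blocks are stored in one bucket...
blocks_storage:
  backend: s3
  s3:
    endpoint: s3.us-east-1.amazonaws.com
    bucket_name: example-gem-blocks

# ...and administration objects (clusters, access policies, tokens, licenses)
# are stored in a separate bucket, using the same S3 client options.
admin_client:
  storage:
    type: s3
    s3:
      endpoint: s3.us-east-1.amazonaws.com
      bucket_name: example-gem-admin
```

Using two buckets keeps administration objects apart from the much larger and more frequently compacted block data.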

To check your object-storage bucket configuration, refer to S3 / Minio backend if you use an S3-compatible API, or to Google Cloud Storage (GCS) backend if you use Google Cloud Storage.

The following snippets show how to configure each of the common object storage backends:

S3 / Minio backend

admin_client:
  storage:
    type: s3
    s3:
      # The S3 bucket endpoint. It could be an AWS S3 endpoint listed at
      # https://docs.aws.amazon.com/general/latest/gr/s3.html or the address of an
      # S3-compatible service in hostname:port format.
      [endpoint: <string> | default = ""]

      # S3 bucket name
      [bucket_name: <string> | default = ""]

      # S3 secret access key
      [secret_access_key: <string> | default = ""]

      # S3 access key ID
      [access_key_id: <string> | default = ""]

      # If enabled, use http:// for the S3 endpoint instead of https://. This could
      # be useful in local dev/test environments while using an S3-compatible
      # backend storage, like Minio.
      [insecure: <boolean> | default = false]

Google Cloud Storage (GCS) backend

admin_client:
  storage:
    type: gcs
    gcs:
      # GCS bucket name
      [bucket_name: <string> | default = ""]

      # JSON representing either a Google Developers Console client_credentials.json
      # file or a Google Developers service account key file. If empty, fallback to
      # Google default logic.
      [service_account: <string> | default = ""]

Admin backend storage cache

For Admin backend storage, you can configure an external cache. By using data stored in the cache, Grafana Enterprise Metrics can continue to run during an object storage outage.

You can use either Memcached or Redis. Memcached is fully supported if you use the mimir-distributed Helm chart. The Helm chart does not install Redis, so to use Redis you must deploy and configure it yourself.
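As a sketch, a filled-in Memcached configuration can use the DNS service discovery format for its addresses. Here, the `dns+` prefix requests an A/AAAA lookup of the name that follows, and the name must include a port. The service name and namespace below are hypothetical; 11211 is the default Memcached port.

```yaml
# Sketch only: the Memcached service name and namespace are hypothetical.
admin_client:
  storage:
    enable_cache: true
    cache:
      backend: memcached
      memcached:
        # Resolve every A/AAAA record behind this name and use each one as a server.
        addresses: dns+memcached.gem.svc.cluster.local:11211
```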

Memcached

admin_client:
  storage:
    # (advanced) Enable caching on the versioned client.
    # CLI flag: -admin.client.cache.enabled
    [enable_cache: <boolean> | default = true]

    cache:
      # Cache backend type. Supported values are: memcached, redis, inmemory.
      # CLI flag: -admin.client.cache.backend
      backend: memcached

      memcached:
        # Comma-separated list of memcached addresses. Each address can be an IP
        # address, hostname, or an entry specified in the DNS Service Discovery
        # format.
        # CLI flag: -admin.client.cache.memcached.addresses
        [addresses: <string> | default = ""]

      # (advanced) How long an item should be cached before being evicted. Only
      # available for remote cache types (memcached, redis).
      # CLI flag: -admin.client.cache.expiration
      [expiration: <duration> | default = 24h]

Redis

admin_client:
  storage:
    # (advanced) Enable caching on the versioned client.
    # CLI flag: -admin.client.cache.enabled
    [enable_cache: <boolean> | default = true]

    cache:
      # Cache backend type. Supported values are: memcached, redis, inmemory.
      # CLI flag: -admin.client.cache.backend
      backend: redis

      redis:
        # Redis Server or Cluster configuration endpoint to use for caching. A
        # comma-separated list of endpoints for Redis Cluster or Redis Sentinel.
        # CLI flag: -admin.client.cache.redis.endpoint
        [endpoint: <string> | default = ""]

      # (advanced) How long an item should be cached before being evicted. Only
      # available for remote cache types (memcached, redis).
      # CLI flag: -admin.client.cache.expiration
      [expiration: <duration> | default = 24h]

Blocks storage

Grafana Enterprise Metrics extends Grafana Mimir’s blocks storage configuration with the following features:

Rate limiting of list calls

List calls to a storage bucket can be rate limited. The blocks-storage.bucket-rate-limit.limit configuration option sets the maximum number of list calls per second before rate limiting applies, and the blocks-storage.bucket-rate-limit.burst configuration option sets the burst size. If blocks-storage.bucket-rate-limit.limit is less than or equal to zero (the default), no rate limiting is applied. The following snippet shows how to set these options in your configuration file for an S3 backend:

blocks_storage:
  backend: s3
  bucket_rate_limit: 100
  bucket_rate_limit_burst: 1

Compactor

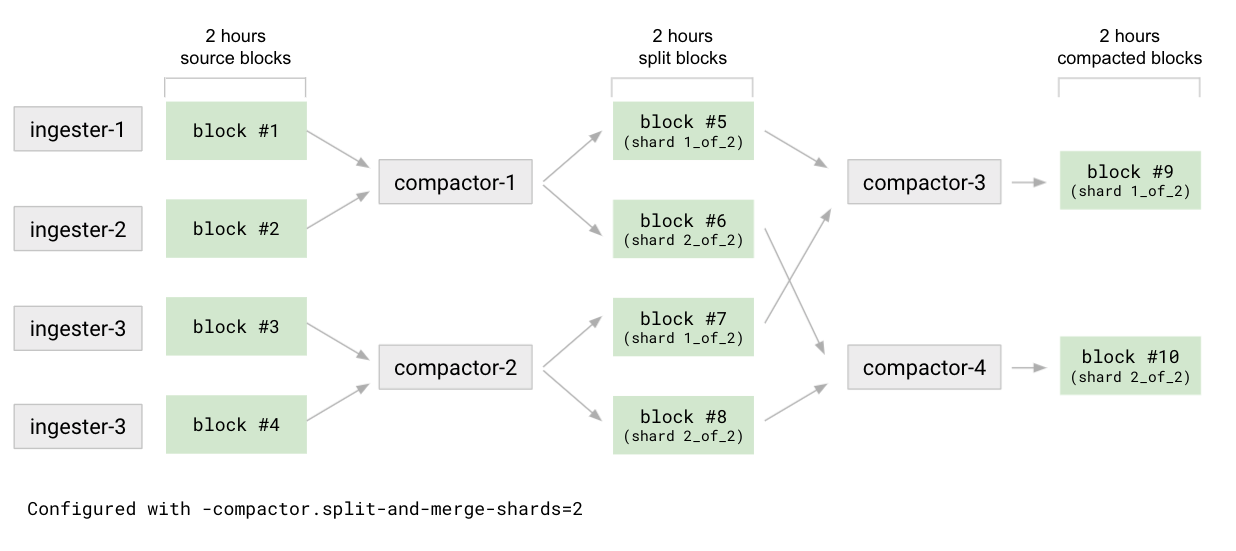

Grafana Enterprise Metrics uses the split-and-merge compactor, which lets you parallelize compaction for a single tenant both vertically and horizontally. This is useful for metrics clusters with very large tenants.

Split-and-merge compaction also lets GEM overcome TSDB index limitations and prevents compacted blocks from growing indefinitely for a very large tenant, at any compaction stage.

Split-and-merge compaction is a two-stage process: split and merge.

For the first configured compaction level (for example, 2h), the compactor divides all source blocks into N groups. For each group, the compactor compacts the blocks together, but instead of producing a single compacted block (as the default strategy does), it outputs N blocks, called split blocks. Each split block contains a subset of the series. Series are sharded across the N split blocks using a stable hashmod function. At the end of the split stage, the compactor has produced N * N blocks, each with a reference to its shard in the block’s meta.json.

The compactor then runs the merge stage on the split blocks, compacting together all split blocks of a given shard. When this stage completes, the number of blocks is reduced by a factor of N, leaving one compacted block per shard for the compaction time range.
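As a worked example of the two stages, take a hypothetical N = 4. In the formula below, h stands for the stable hash function applied to a series' labels. The source specifies only that the function is a stable hashmod, not which hash it is.

$$\text{shard}(s) = h(\text{labels}(s)) \bmod N$$

$$\underbrace{N}_{\text{groups}} \times \underbrace{N}_{\text{split blocks per group}} = N^2 = 16 \text{ blocks after the split stage}$$

$$\frac{N^2}{N} = N = 4 \text{ blocks after the merge stage (one per shard)}$$

Each of the 4 groups contributes one split block to every shard. The merge stage therefore combines 4 blocks per shard into 1.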

The merge stage then runs for subsequent compaction time ranges (for example, 12h and 24h), compacting together blocks that belong to the same shard (not shown in the figure).

You can configure N, the number of split blocks, on a per-tenant basis with -compactor.split-and-merge-shards, and adjust it based on each tenant's number of series. As a tenant's series count grows, you can increase its number of shards to improve compaction parallelization and keep each per-shard compacted block at a manageable size. We currently recommend 1 shard per 25-30 million active series in a tenant. For example, for a tenant with 100 million active series, set -compactor.split-and-merge-shards=4. Note: This recommendation might change because this feature is still experimental.
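Because the shard count is a per-tenant limit, one way to apply the recommendation above is a per-tenant override. This sketch assumes the Mimir runtime configuration file, loaded with -runtime-config.file, and its `overrides` section. The tenant ID `tenant-a` is hypothetical and is assumed to have about 100 million active series.

```yaml
# Runtime configuration file (assumed to be passed with -runtime-config.file).
# "tenant-a" is a hypothetical tenant ID.
overrides:
  tenant-a:
    # ~100 million active series / 25-30 million series per shard, rounded up = 4
    compactor_split_and_merge_shards: 4
```

Other tenants keep the default from the `limits` block, so only the large tenant gets the higher shard count.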

Vertical scaling with split-and-merge compaction

To scale compaction vertically, use the -compactor.compaction-concurrency flag. It sets the maximum number of concurrent compactions that a single compactor replica can run; each compaction uses 1 CPU core.

Horizontal scaling with split-and-merge compaction

By default, GEM spreads compaction of tenant blocks across all compactors.

You can enable compactor shuffle sharding by setting compactor-tenant-shard-size to a value higher than 0 and lower than the total number of compactors.

When you enable compactor shuffle sharding, only the specified number of compactors compact blocks for a given tenant.

Vertical and horizontal scaling both achieve the same goal: they speed up compaction by parallelizing it, either across multiple compactor replicas (horizontal scaling) or across multiple CPU cores on the same compactor replica (vertical scaling). You can use neither, one, or both types of scaling.
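As a rough worked example of combining both types, take the values in the sample configuration in this section: a compaction concurrency of 4 and a tenant shard size of 2. The product is an upper bound. It assumes that enough non-conflicting jobs exist and that the two compactors are not busy with other tenants.

$$\text{max concurrent compaction jobs for one tenant} = \underbrace{2}_{\texttt{compactor\_tenant\_shard\_size}} \times \underbrace{4}_{\texttt{compaction\_concurrency}} = 8$$

Because each compaction uses 1 CPU core, each of the two replicas uses up to 4 cores for this tenant's jobs.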

Below is a sample configuration for enabling split-and-merge compaction in the compactor:

compactor:
  # Enable vertical scaling during the split-and-merge compaction.
  # A single compactor replica can execute up to compaction_concurrency non-conflicting
  # compaction jobs for a single tenant at once (in this example, 4 jobs at once).
  compaction_concurrency: 4

limits:
  # Number of shards per tenant
  compactor_split_and_merge_shards: 2

  # Number of compactor replicas that a tenant's compaction jobs can be shared across
  compactor_tenant_shard_size: 2

How does the compaction behave if -compactor.split-and-merge-shards changes?

If you change the -compactor.split-and-merge-shards setting, the change affects only the compaction of blocks that haven’t been split yet. Blocks that have already gone through the split stage are not split again to match the new setting. Instead, they are merged using the old configuration, which is stored in the meta.json of each split block.

Ruler

The ruler is an optional service that evaluates the PromQL expressions in recording and alerting rules. The ruler requires backend storage for each tenant's recording and alerting rules.

Grafana Enterprise Metrics extends Grafana Mimir’s upstream ruler configuration with the following features:

Remote-write forwarding

Use the following configuration options to enable remote-write rule groups and to specify the directory that stores the generated write-ahead log (WAL). To learn more, refer to the remote-write rule forwarding documentation.

ruler:
  remote_write:
    enabled: <bool. defaults to false>
    wal_dir: <directory path. defaults to ./wal>

Remote rule evaluation using query-frontend

When you configure the ruler to use the query-frontend for remote rule evaluation, you must specify an authorization token with the -ruler.query-frontend.auth-token option so that the ruler's requests to the query-frontend are authorized.

During remote evaluation, the ruler sets the tenant on each request to the query-frontend according to the tenant that the rule group queries. Because a rule group can be associated with any tenant in the cluster, the authorization token must be associated with an access policy that has metrics:read access to all tenants.

Such an access policy must have the metrics:read scope and realm->tenant set to the wildcard character (*). For more information, refer to Create access policy.
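As a sketch, the ruler's command-line flags for remote evaluation could look like the following Kubernetes container args. The address uses Mimir's -ruler.query-frontend.address flag. The service name and the token placeholder are hypothetical. Avoid committing a real token to source control.

```yaml
# Hypothetical ruler container args; the address and token are placeholders.
args:
  - -target=ruler
  # Send rule queries to the query-frontend instead of evaluating them locally.
  - -ruler.query-frontend.address=dns:///query-frontend.gem.svc.cluster.local:9095
  # Token bound to an access policy with metrics:read on all tenants (*).
  - -ruler.query-frontend.auth-token=<ruler-access-token>
```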

Gateway

For information about the gateway and its configuration, refer to Gateway.
